Human-Robot Interaction for Symbiotic Robots in a Public Space in a City

Introduction

Robots can now carry out a wide variety of tasks, and more and more jobs are expected to be automated through artificial intelligence. In our future daily lives, we can expect to see robots take on the roles of shopkeepers, watchmen, or receptionists.

However, beyond simply accomplishing tasks, human employees protect the standard of civility of a public place through their presence and behavior. Without this, people’s morals will degrade and incivilities will multiply: we will witness more and more bullying, littering, illegal parking, graffiti, and so forth.

Politeness, an enjoyable ambiance, and peer pressure are fundamental to protecting the moral level of a place. If robots are to carry out these jobs, it is crucial that they undertake the moral interactions required to keep the moral level high.

It has been observed that current robots, unlike humans, are not seen as moral entities. As such, they are not granted respect, and their presence does not exert any moral pressure. Our project tackles this problem.

Project presentation video

Research approach

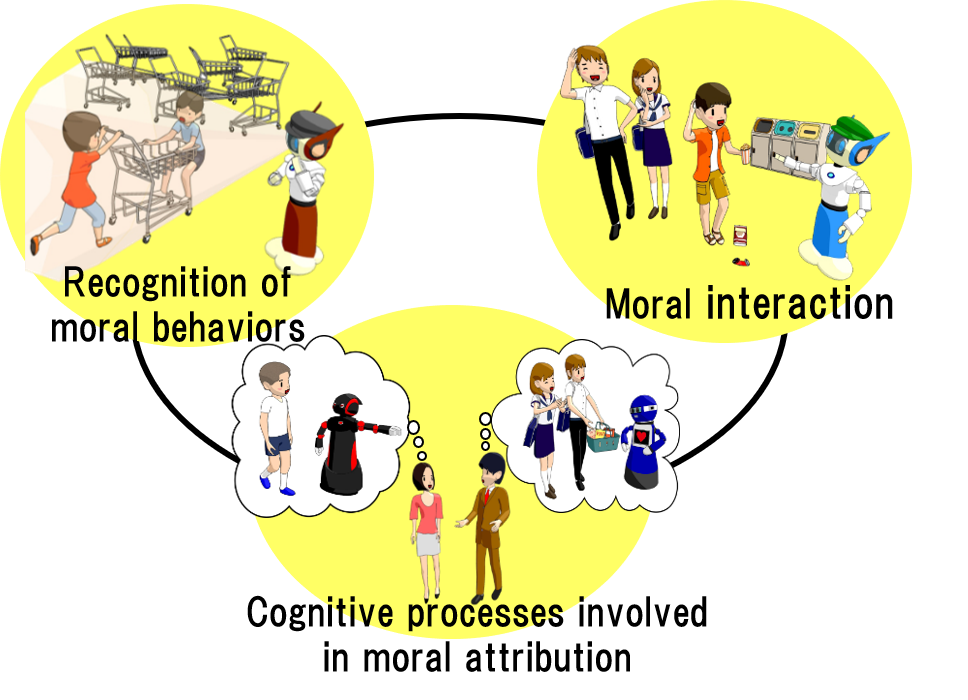

Our research has two goals:

– Moral attribution: create a situation where the robot is respected as a peer.

– Moral encouragement: the robot, by its presence, should promote moral behavior.

To fulfil those goals, our approach is to perform field experiments while focusing on three points:

– Observe a large number of moral behaviors and become able to automatically recognize them;

– Develop a robot with a human-like sense of morality that can execute moral interactions;

– Explain the causes and circumstances of low morals based on real-world data, and unveil the cognitive processes involved in morals.

Published studies

Below is a list of the research publications produced under this project.

Recognition of Rare Low-Moral Actions Using Depth Data

Kanghui Du, Thomas Kaczmarek, Dražen Brščić, Takayuki Kanda, Sensors, 2020

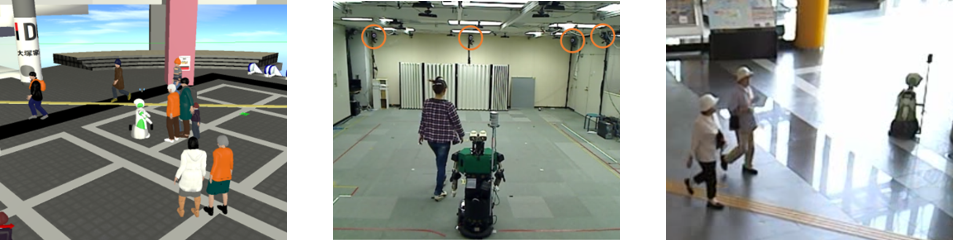

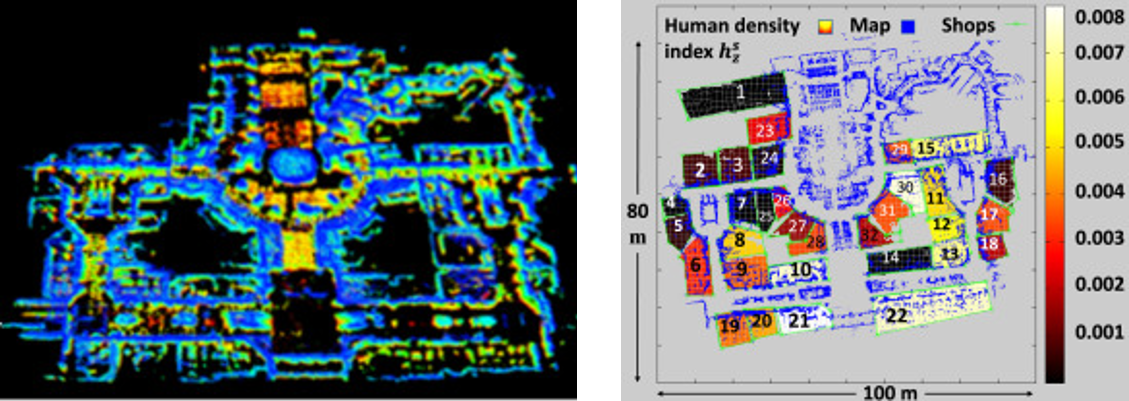

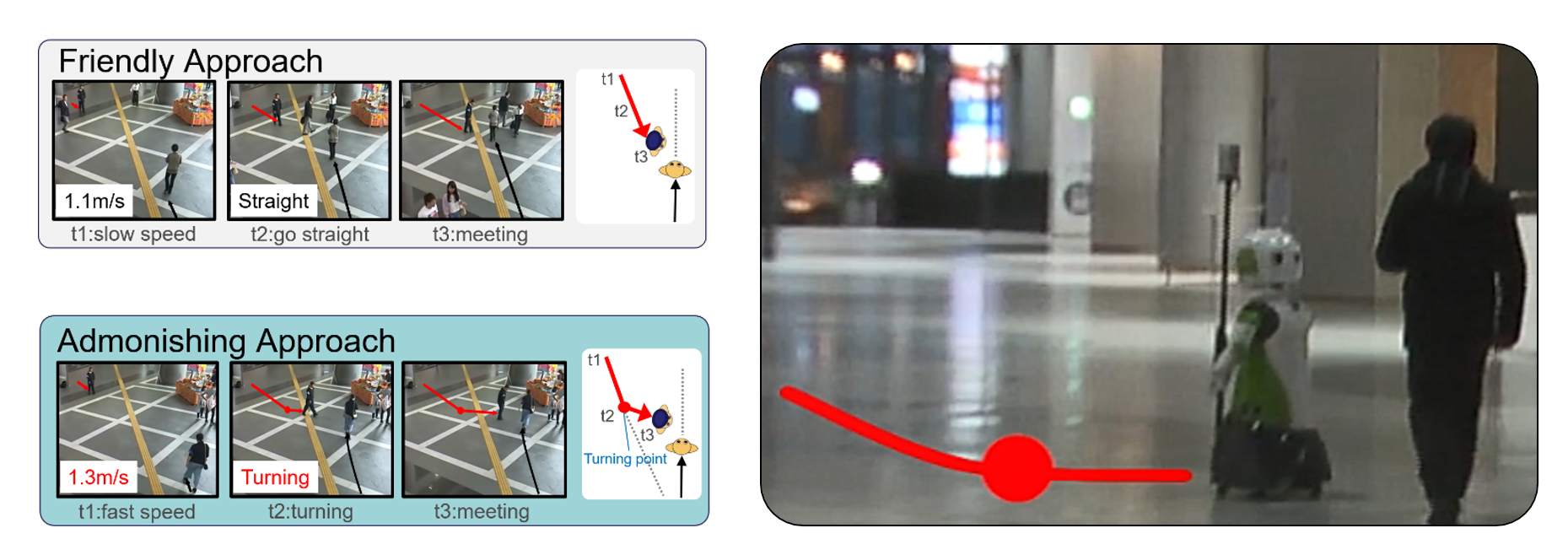

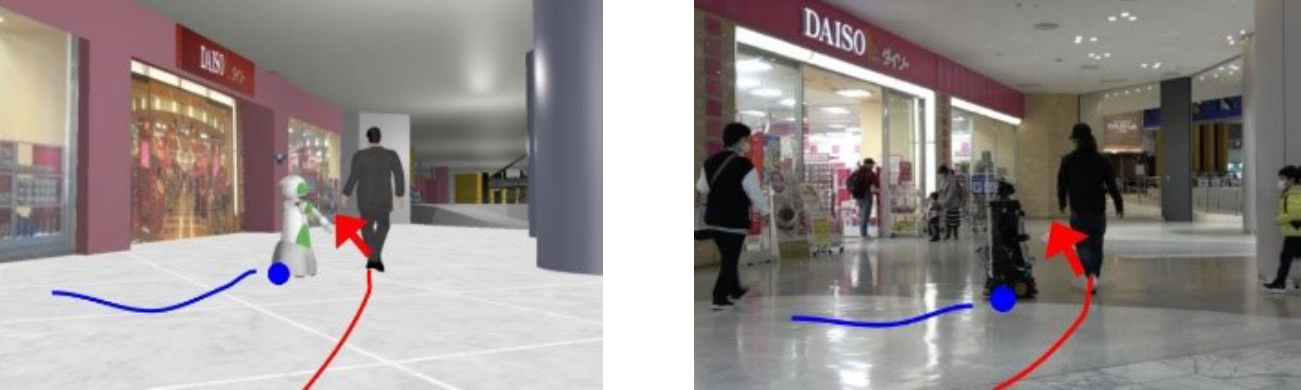

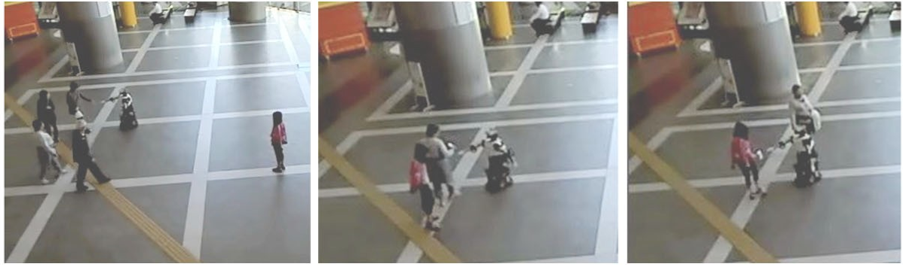

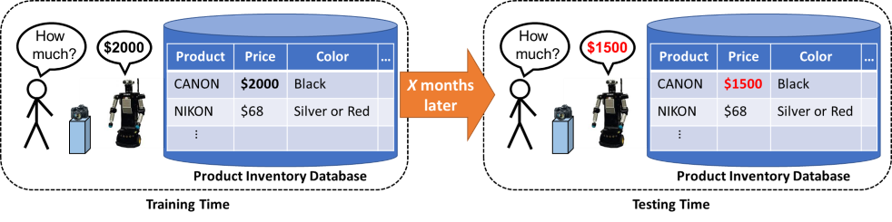

Publication: Kanghui Du, Thomas Kaczmarek, Dražen Brščić, Takayuki Kanda. Recognition of Rare Low-Moral Actions Using Depth Data, Sensors 2020, Volume 20, Issue 10, Article number 2758, 2020.05.12 https://doi.org/10.3390/s20102758

Abstract: Detecting and recognizing low-moral actions in public spaces is important. But low-moral actions are rare, so in order to learn to recognize a new low-moral action in general we need to rely on a limited number of samples. In order to study the recognition of actions from a comparatively small dataset, in this work we introduced a new dataset of human actions consisting in large part of low-moral behaviors. In addition, we used this dataset to test the performance of a number of classifiers, which used either depth data or extracted skeletons. The results show that both depth data and skeleton based classifiers were able to achieve similar classification accuracy on this dataset (Top-1: around 55%, Top-5: around 90%). Also, using transfer learning in both cases improved the performance.

Publication: Dražen Brščić, Rhys Wyn Evans, Matthias Rehm, Takayuki Kanda. Using a Rotating 3D LiDAR on a Mobile Robot for Estimation of Person’s Body Angle and Gender, Sensors 2020, Volume 20, Issue 14, Article number 3964, 2020.07.16 https://doi.org/10.3390/s20143964

Abstract: We studied the use of a rotating multi-layer 3D Light Detection And Ranging (LiDAR) sensor (specifically the Velodyne HDL-32E) mounted on a social robot for the estimation of features of people around the robot. While LiDARs are often used for robot self-localization and people tracking, we were interested in the possibility of using them to estimate the people’s features (states or attributes), which are important in human–robot interaction. In particular, we tested the estimation of the person’s body orientation and their gender.
As collecting data in the real world and labeling them is laborious and time consuming, we also looked into other ways for obtaining data for training the estimators: using simulations, or using LiDAR data collected in the lab. We trained convolutional neural network-based estimators and tested their performance on actual LiDAR measurements of people in a public space. The results show that with a rotating 3D LiDAR a usable estimate of the body angle can indeed be achieved (mean absolute error 33.5°), and that using simulated data for training the estimators is effective. For estimating gender, the results are satisfactory (accuracy above 80%) when the person is close enough; however, simulated data do not work well and training needs to be done on actual people measurements.

Publication: Koki Makita, Dražen Brščić, Takayuki Kanda. Recognition of Human Characteristics Using Multiple Mobile Robots with 3D LiDARs, 2021 IEEE/SICE International Symposium on System Integration (SII) Proceedings, pp. 650-655, 2021.01 https://doi.org/10.1109/IEEECONF49454.2021.9382640

Abstract: Mobile robots are gradually entering our human spaces. Apart from being able to accurately localize themselves, in populated environments robots also need to detect humans and recognize their characteristics. In this work we focused on using 3D LiDARs for sensing, and in particular on the question whether they can be used to classify characteristics of people around the robot. Moreover, since using sensors from multiple robots is expected to give more accurate recognition, we compared several ways how to combine multiple 3D LiDARs. We evaluated these methods using simulator data as well as actual real-world data. For the real-world data we created a novel dataset taken with multiple robots equipped with 3D LiDARs in a shopping center, which also includes manually labeled characteristics of the detected pedestrians.
The results show that combining several 3D LiDARs makes the recognition more accurate. However, we were not able to achieve satisfactory accuracy with real-world data.

Publication: Deneth Karunarathne, Yoichi Morales, Takayuki Kanda, Hiroshi Ishiguro. Understanding a public environment via continuous robot observations, Robotics and Autonomous Systems, Vol. 126, Issue C, Apr 2020 https://doi.org/10.1016/j.robot.2020.103443

Abstract: This paper presents a study on a point cloud analysis captured by a robot navigating in a shopping mall environment. It investigates the type and how much information the robot could extract from the environment. For this purpose, information regarding environmental changes and the number of people in shops was extracted and analyzed. First, the robot was manually controlled to collect data in a typical shopping mall having different types of shops and a food court. As the robot navigated thoroughly around the environment, seven data recordings of data obtained from various onboard sensors were recorded during afternoon hours over three consecutive days. We built a composite map by overlaying 3D point clouds for each recording sharing the same coordinate frame, which reveals the changes in the environment’s static objects. The number of humans at each shop in each recording was computed using a human tracker. Then, we computed a fourteen-dimensional vector for each shop: seven dimensions for environmental changes and seven for human density. Experimental results show that the environmental changes and the human density at each shop are consistent with the visual changes that occurred in the shops and the number of people who visited the shops. Correlation analysis was done among shop changes, shop open space, and human density where results suggest that change in shop configurations are often done in smaller shops and shops with larger open space tend to attract larger number of customers.
Finally, information extracted from shops was used to categorize the shops according to similarity.

Publication: Jani Even, Satoru Satake, Takayuki Kanda (2019) Monitoring Blind Regions with Prior Knowledge Based Sound Localization. In: Salichs M. et al. (eds) Social Robotics. ICSR 2019. Lecture Notes in Computer Science, vol 11876. Springer, Cham https://doi.org/10.1007/978-3-030-35888-4_64

Abstract: This paper presents a sound localization method designed for dealing with blind regions. The proposed approach mimics Human’s ability of guessing what is happening in the blind regions by using prior knowledge. A user study was conducted to demonstrate the usefulness of the proposed method for human-robot interaction in environments with blind regions. The subjects participated in a shoplifting scenario during which the shop clerk was a robot that has to rely on its hearing to monitor a blind region. The participants understood the enhanced capability of the robot and it favorably affected the rating of the robot using the proposed method.

Publication: Carlos T. Ishi, Takayuki Kanda. Prosodic and voice quality analyses of loud speech: differences of hot anger and far-directed speech, Proc. SMM19, Workshop on Speech, Music and Mind 2019, 1-5, https://doi.org/10.21437/SMM.2019-1

Abstract: In this study, we analyzed the differences in acoustic-prosodic and voice quality features of loud speech in two situations: hot anger (aggressive/frenzy speech) and far-directed speech (i.e., speech addressed to a person in a far distance). Analysis results indicated that both types are accompanied by louder power and higher pitch, while differences were observed in the intonation: far-directed voices tend to have large power and high pitch over the whole utterance, while angry speech has more pitch movements in a larger pitch range.
Regarding voice quality, both types tend to be tenser (higher vocal effort), but angry speech tends to be more pressed, with local appearance of harsh voices (with irregularities in the vocal fold vibrations).

Publication: Risa Maeda, Dražen Brščić, Takayuki Kanda. Influencing Moral Behavior Through Mere Observation of Robot Work: Video-based Survey on Littering Behavior, HRI ’21: Proceedings of the 2021 ACM/IEEE International Conference on Human-Robot Interaction, pp. 83–91, 2021.03 https://doi.org/10.1145/3434073.3444680

Abstract: Can robots influence the moral behavior of humans by simply doing their job? Robots have been considered as replacement for humans in repetitive jobs in public spaces, but what could that mean for the behavior of the surrounding people is not known. In this work we were interested to see how people change their behavior when they observe either a robot or a person do a morally-laden task. In particular, we studied the influence of seeing a robot or a human picking up and discarding garbage on the observer’s willingness to litter or to pick up garbage. The study was done as a video-based survey. Results show that while observing a person clean up does make people less keen to litter, this effect is not present when people watch a robot doing the same action. Moreover, people appear to feel less guilty about littering if they observed a robot doing the cleaning up than in the case when they watched a human cleaner.

Publication: Daniel J. Rea, Sebastian Schneider, Takayuki Kanda. “Is this all you can do? Harder!”: The Effects of (Im)Polite Robot Encouragement on Exercise Effort, HRI ’21: Proceedings of the 2021 ACM/IEEE International Conference on Human-Robot Interaction, pp. 225–233, 2021.03 https://doi.org/10.1145/3434073.3444660

Abstract: Most social robot behaviors in human-robot interaction are designed to be polite, but there is little research about how or when a robot could be impolite, and if that may ever be beneficial. We explore the potential benefits and tradeoffs of different politeness levels for human-robot interaction in an exercise context.
We designed impolite and polite phrases for a robot exercise trainer and conducted a 24-person experiment where people squat in front of the robot as it uses (im)polite phrases to encourage them. We found participants exercised harder and felt competitive with the impolite robot, while the polite robot was found to be friendly, but sometimes uncompelling and disingenuous. Our work provides evidence that human-robot interaction should continue to aim for more nuanced and complex models of communication.

Publication: Yuma Oda, Jani Even and Takayuki Kanda. Wake up and talk with me! In-the-field study of an autonomous interactive wake up robot, The 12th International Conference on Social Robotics (ICSR 2020) Proceedings, Lecture Notes in Computer Science, vol 12483, pp. 61-72, 2020.11 https://doi.org/10.1007/978-3-030-62056-1_6

Abstract: In this paper, we present a robot that is designed to smoothly wake up a user in the morning. We created an autonomous interactive wake up robot that implements a wake up behavior that was selected through preliminary experiments. We conducted a user study to test the interactive robot and compared it to a baseline robot that behaves like a conventional alarm clock. We recruited 22 participants that agreed to bring the robot to their home and test it for two consecutive nights. The participants felt significantly less sleepy after waking up with the interactive robot, and reported significantly more intention to use the interactive robot.

Publication: Tatsuya Nomura, Takayuki Kanda, Sachie Yamada, Tomohiro Suzuki. The effects of assistive walking robots for health care support on older persons: a preliminary field experiment in an elder care facility, Intelligent Service Robotics (2021) 14(6), pp. 25–32, 2021.01 https://doi.org/10.1007/s11370-020-00345-4

Abstract: In the present research, we prepared a human-size humanoid that autonomously navigates alongside with a walking person.
Its utterances were controlled by Wizard-of-Oz method. A field experiment in which older persons walked in an elder care facility together with the robot while it talked to them was conducted to investigate the effects of robot accompaniment on older people requiring health care support in terms of increasing their motivation to engage in physical exercise. The participants (N = 23) were residents in the facility or persons with health problems who had been receiving day care at the facility. The experimental results suggested that (1) the participants enjoyed walking with the robot more than walking alone, (2) the physical burden did not differ between walking styles, and (3) walking with the robot evoked the participants’ perception of novelty or stimulated an existing interest in assistive robotics, both leading to positive feelings.

Publication: Deneth Karunarathne, Yoichi Morales, Takayuki Kanda, Hiroshi Ishiguro. Will Older Adults Accept a Humanoid Robot as a Walking Partner?, International Journal of Social Robotics, 11, 343–358, 2019 https://doi.org/10.1007/s12369-018-0503-6

Abstract: We conducted an empirical study with older adults whose ages ranged from 60 to 73 and compared situations where they walked alone and with a robot. A parameter-based side by side walking model which uses motion, environmental and relative parameters derived from human–human side by side walking was used to navigate the robot autonomously. The model anticipates the motion of both robot and the human partner for motion prediction, uses subgoals on the environment and does not require the final goal to be known. The participants’ perceptions of ease of walking with/without the robot, enjoyment, and intention to walk with/without the robot were measured.
Experimental results revealed that they gave significantly higher ratings to the intention for walking with the robot than walking alone, although no such significance was found in ease of walking with/without the robot or enjoyment. We analyzed the interview results and found that our participants wanted to walk with the robot again because they felt positive about it at present and expected it to improve over time.

Publication: Taichi Sono, Satoru Satake, Takayuki Kanda, Michita Imai. Walking partner robot chatting about scenery, Advanced Robotics, 33:15-16, 742-755, 2019 https://doi.org/10.1080/01691864.2019.1610062

Abstract: We believe that many future scenarios will exist where a partner robot will talk with people on walks. To improve the user experience, we aim to endow robots with the capability to select appropriate conversation topics by allowing them to start chatting about a topic that matches the current scenery and to extend it based on the user’s interest and involvement in it. We implemented a function to compute the similarities between utterances and scenery by comparing their topic vectors. First, we convert the scenery into a list of words by leveraging Google Cloud Vision library. We form a topic vector space with the Latent Dirichlet Allocation method and transform a list of words into a topic vector. Our system uses this function to choose (from an utterance database) the utterance that best matches the current scenery. Then it estimates the user’s level of involvement in the chat with a simple rule based on the length of their responses. If the user is actively involved in the chat topic, the robot continues the current topic using pre-defined derivative utterances. If the user’s involvement sags, the robot selects a new topic based on the current scenery. The topic selection that is based on the current scenery was proposed in our previous work.
Our main contribution is the whole chat system, which includes the user’s involvement estimation. We implemented our system with a shoulder-mounted robot and conducted a user study to evaluate its effectiveness. Our experimental results show that users evaluated the robot with the proposed system as a better walking partner than one that randomly chose utterances.

Publication: Ely Repiso, Francesco Zanlungo, Takayuki Kanda, Anaís Garrell, Alberto Sanfeliu. People’s V-Formation and Side-by-Side Model Adapted to Accompany Groups of People by Social Robots, 2019 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), pp. 2082-2088, 2019 https://doi.org/10.1109/IROS40897.2019.8968601

Abstract: This paper presents a new method to allow robots to accompany a person or a group of people imitating pedestrians behavior. Two-people groups usually walk in a side-by-side formation and three-people groups walk in a V-formation so that they can see each other. For this reason, the proposed method combines a Side-by-side and V-formation pedestrian model with the Anticipative Kinodynamic Planner (AKP). Combining these methods, the robot is able to do an anticipatory accompaniment of groups of humans, as well as to avoid static and dynamic obstacles in advance, while keeping the prescribed formations. The proposed framework allows also a dynamical re-positioning of the robot, if the physical position of the partners change in the group formation. Furthermore, people have a randomness factor that the robot has to manage, for that reason, the system was adapted to deal with changes in people’s velocity, orientation and occlusions. Finally, the method has been validated using synthetic experiments and real-life experiments with our Tibi robot. In addition, a user study has been realized to reveal the social acceptability of the method.

Publication: Deneth Karunarathne, Yoichi Morales, Takayuki Kanda, Hiroshi Ishiguro.
Model of Side-by-Side Walking Without the Robot Knowing the Goal, International Journal of Social Robotics, 10, 401–420, 2018 https://doi.org/10.1007/s12369-017-0443-6

Abstract: We humans often engage in side-by-side walking even when we do not know where we are going. Replicating this capability in a robot reveals the complications of such daily interactions. We analyzed human–human interactions and found that human pairs sustained a side-by-side walking formation even when one of them (the follower) did not know the destination. When multiple path choices exist, the follower walks slightly behind his partner. We modeled this interaction by assuming that one needs knowledge from the environment, like the locations to which people typically move toward: subgoals. This model enables a robot to switch between two interaction modes; in one mode, it strictly maintains the side-by-side walking formation, and in another it walks slightly behind its partner. We conducted an evaluation experiment in a real shopping arcade and revealed that our model replicates human side-by-side walking better than other simple methods in which the robot simply moves to the side of a person and without the human tendency for choosing the next appropriate subgoals.

Publication: Daichi Morimoto, Jani Even, and Takayuki Kanda. Can a Robot Handle Customers with Unreasonable Complaints? In Proceedings of the 2020 ACM/IEEE International Conference on Human-Robot Interaction (HRI ’20), March 23–26, 2020, Cambridge, United Kingdom. ACM, New York, NY, USA, 9 pages. https://doi.org/10.1145/3319502.3374830

Abstract: In recent years, the service industry faces a rise in the number of malicious customers. Making unreasonable complaint is one misbehavior that is particularly stressful for the workers. Considering the recent push for introducing robots in the working places, a robot should be handling these undesirable customers in place of the workers.
This paper shows how we designed a behavioral model that enables a robot to handle a customer making an unreasonable complaint. The robot has to “please the customer” without proposing a settlement. We used information from a recent large survey of workers from the Japanese service industry supplemented by information from interviews we conducted with experienced workers to derive our proposed behavioral model. We identified the conventional complaint handling flow as 1) listening to the complaint, 2) confirming the content of the complaint, 3) apologizing, 4) giving an explanation, and 5) concluding. Our proposed behavioral model takes into account the “state of mind” of the customer by looping on the first step as long as the customer is not “ready to listen”. The robot also asks questions while looping. Using the Wizard-of-Oz paradigm, we conducted a user study in our laboratory that imitates the situation of a complaining customer in a mobile phone shop. The proposed behavioral model was significantly better at making the customers believe that the robot listened to them and tried to help them.

Publication: R. Maeda, J. Even and T. Kanda, “Can a Social Robot Encourage Children’s Self-Study?,” 2019 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Macau, China, 2019, pp. 1236-1242. https://ieeexplore.ieee.org/stamp/stamp.jsp?tp=&arnumber=8967825&isnumber=8967518

Abstract: We developed a robot behavioral model designed to support children during self-study. In particular, we want to investigate how a robot could increase the time children keep concentration. The behavioral model was developed by observing children during self-study and by collecting information from experienced tutors through interviews. After observing the children, we decided to consider three states corresponding to different levels of concentration.
The child can be smoothly performing the task (“learning” state), encountering some difficulties (“stuck” state) or distracted (“distracted” state). The behavioral model was designed to increase the time spent concentrating on the task by implementing adequate behaviors for each of these three states. These behaviors were designed using the advice collected during the interview survey of the experienced tutors. A self-study system based on the proposed behavior model was implemented. In this system, a small robot sits on the table and encourages the child during self-study. An operator is in charge of determining the state of the child (Wizard of Oz) and the behavioral model triggers the appropriate behaviors for the different states. To demonstrate the effectiveness of the proposed behavioral model, a user study was conducted: 22 children were asked to solve problems alone and to solve problems with the robot. The children spent significantly (p = 0.024) more time in the “learning” state when studying with the robot.

Publication: Jorge Gallego Pérez, Kazuo Hiraki, Yasuhiro Kanakogi, and Takayuki Kanda. 2019. Parent Disciplining Styles to Prevent Children’s Misbehaviors toward a Social Robot. In Proceedings of the 7th International Conference on Human-Agent Interaction (HAI ’19). Association for Computing Machinery, New York, NY, USA, 162–170. https://doi.org/10.1145/3349537.3351903

Abstract: It has been noticed that children often tend to interrupt and sometimes bully robots in public space. In a laboratory environment designed to stimulate children’s disruptive behavior, we compared the robot’s use of an adaptation of a parental discipline strategy, the so-called love-withdrawal technique, to a similar set of robot behaviors that lacked any specific strategy (neutral condition).
The main insight we gained was that perhaps we should better not focus on general robot behaviors to try to fit all children, but rather, we should adapt the robot behaviors to children’s individual differences. For instance, we found that the love-withdrawal-based strategy was significantly more effective in children of age 8-9 than on children of 7.

Publication: Federico Manzi, Mitsuhiko Ishikawa, Cinzia Di Dio, Shoji Itakura, Takayuki Kanda, Hiroshi Ishiguro, Davide Massaro, Antonella Marchetti. The understanding of congruent and incongruent referential gaze in 17-month-old infants: an eye-tracking study comparing human and robot, Scientific Reports, Vol. 10, Article number 11918, 2020.07.17 https://doi.org/10.1038/s41598-020-69140-6

Abstract: Several studies have shown that the human gaze, but not the robot gaze, has significant effects on infant social cognition and facilitate social engagement. The present study investigates early understanding of the referential nature of gaze by comparing, through the eye-tracking technique, infants’ response to human and robot’s gaze. Data were acquired on thirty-two 17-month-old infants, watching four video clips, where either a human or a humanoid robot performed an action on a target. The agent’s gaze was either turned to the target (congruent) or opposite to it (incongruent). The results generally showed that, independent of the agent, the infants attended longer at the face area compared to the hand and target. Additionally, the effect of referential gaze on infants’ attention to the target was greater when infants watched the human compared to the robot’s action. These results suggest the presence, in infants, of two distinct levels of gaze-following mechanisms: one recognizing the other as a potential interactive partner, the second recognizing partner’s agency.
In this study, infants recognized the robot as a potential interactive partner, whereas ascribed agency more readily to the human, thus suggesting that the process of generalizability of gazing behaviour to non-humans is not immediate.

Publication: Ying Wang, Yun-Hee Park, Shoji Itakura, Annette Margaret Elizabeth Henderson, Takayuki Kanda, Naoki Furuhata, Hiroshi Ishiguro. Infants’ perceptions of cooperation between a human and robot, Infant and Child Development, 29:e2161, 2020 https://doi.org/10.1002/icd.2161

Abstract: Cooperation is fundamental to human society; thus, it may come as little surprise that by their second birthdays, infants are able to perceive when two human agents are working together towards a shared goal. However, far less is known about whether infants view nonhuman agents as being capable of cooperative shared goals. Thirteen-month-old infants were habituated to a cooperative interaction involving a human and robot agent as they worked to remove a toy from inside a box. While previous research suggests that infants readily structure the actions of human cooperative partners as being towards a shared goal, surprisingly infants in the current study did not extend their expectations about cooperation when a robot agent was present. These findings contribute to our understanding of the nature of infants’ developing notions of goal-directed behaviour and are the first examination of infants’ perceptions of cooperation involving robotic agents.

Publication: Sachie Yamada, Takayuki Kanda, and Kanako Tomita. 2020. An Escalating Model of Children’s Robot Abuse. In Proceedings of the 2020 ACM/IEEE International Conference on Human-Robot Interaction (HRI ’20), March 23–26, 2020, Cambridge, United Kingdom. ACM, New York, NY, USA, 9 pages. https://doi.org/10.1145/3319502.3374833

Abstract: We reveal the process of children engaging in such serious abuse as kicking and punching robots.
In study 1, we established a process model of robot abuse and used a qualitative analysis method specialized for time-series data: the Trajectory Equifinality Model (TEM). With the TEM method, we analyzed interactions from nine children who committed serious robot abuse from which we developed a multi-stage model: the abuse escalation model. The model has four stages: approach, mild abuse, physical abuse, and escalation. For each stage, we identified social guides (SGs), which are influencing events that fuel the stage. In study 2, we conducted a quantitative analysis to examine the effect of these SGs. We analyzed 12 hours of data that included 522 children who visited the observed area nearby the robot, coded their behaviors, and statistically tested whether the presence of each SG promoted the stage. Our analysis confirmed the correlations of four SGs and children’s behaviors: the presence of other children related a new child to approach the robot (SG1); mild abuse by another child related a child to do mild abuse (SG2); physical abuse by another child related a child to conduct physical abuse (SG3); and encouragement from others related a child to escalate the abuse (SG5).

Publication: Tatsuya Nomura, Takayuki Kanda, Tomohiro Suzuki, Sachie Yamada. Do people with social anxiety feel anxious about interacting with a robot?, AI & SOCIETY 35, 381–390, 2020 https://doi.org/10.1007/s00146-019-00889-9

Abstract: To investigate whether people with social anxiety have less actual and “anticipatory” anxiety when interacting with a robot compared to interacting with a person, we conducted a 2 × 2 psychological experiment with two factors: social anxiety and interaction partner (a human confederate and a robot). The experiment was conducted in a counseling setting where a participant played the role of a client and the robot or the confederate played the role of a counselor.
First, we measured the participants’ social anxiety using the Social Avoidance and Distress Scale, after which, we measured their anxiety at two specific moments: “anticipatory anxiety” was measured after they knew that they would be interacting with a robot or a human confederate, and actual anxiety was measured after they actually interacted with the robot or confederate. Measurements were performed using the Profile of Mood States and the State–Trait Anxiety Inventory. The results indicated that participants with higher social anxiety tended to feel less “anticipatory anxiety” and tension when they knew that they would be interacting with robots compared with humans. Moreover, we found that interaction with a robot elicited less tension compared with interaction with a person regardless of the level of social anxiety.

Publication: Kimmo J. Vänni, Sirpa E. Salin, John-John Cabibihan, Takayuki Kanda. Robostress, a New Approach to Understanding Robot Usage, Technology, and Stress, In: Salichs M. et al. (eds) Social Robotics. ICSR 2019. Lecture Notes in Computer Science, vol 11876. Springer, Cham. https://doi.org/10.1007/978-3-030-35888-4_48

Abstract: Robostress is a user’s perceived or measured stress in relation to the use of interactive physical robots. It is an offshoot from technostress where a user perceives experience of stress when using technologies. We explored robostress and the related variables. The methods consisted of a cross-sectional survey conducted in Finland, Qatar and Japan among university students and staff members (n = 60). The survey data was analyzed with descriptive statistics and a Pearson Correlation Test. The results presented that people perceived stress when or if using the robots and the concept of robostress exists. The reasons for robostress were lack of time and technical knowledge, but the experience of technical devices and applications mitigate robostress.

Publication: Marlena R.
Fraune, Selma Šabanović and Takayuki Kanda. Human Group Presence, Group Characteristics, and Group Norms Affect Human-Robot Interaction in Naturalistic Settings, Frontiers in Robotics and AI, 6:48, 2019 https://doi.org/10.3389/frobt.2019.00048 Abstract: As robots become more prevalent in public spaces, such as museums, malls, and schools, they are coming into increasing contact with groups of people, rather than just individuals. Groups, compared to individuals, can differ in robot acceptance based on the mere presence of a group, group characteristics such as entitativity (i.e., cohesiveness), and group social norms; however, group dynamics are seldom studied in relation to robots in naturalistic settings. To examine how these factors affect human-robot interaction, we observed 2,714 people in a Japanese mall receiving directions from the humanoid robot Robovie. Video and survey responses evaluating the interaction indicate that groups, especially entitative groups, interacted more often, for longer, and more positively with the robot than individuals. Participants also followed the social norms of the groups they were part of; participants who would not be expected to interact with the robot based on their individual characteristics were more likely to interact with it if other members of their group did. These results illustrate the importance of taking into account the presence of a group, group characteristics, and group norms when designing robots for successful interactions in naturalistic settings. Read more... Publication: Tatsuya Nomura, Kazuki Otsubo, Takayuki Kanda. Preliminary Investigation of Moral Expansiveness for Robots, 2018 IEEE Workshop on Advanced Robotics and its Social Impacts (ARSO), pp. 91-96, 2018 https://doi.org/10.1109/ARSO.2018.8625717 Abstract: To clarify whether humans can extend moral care and consideration to robotic entities, a psychological experiment was conducted for twenty-five undergraduate and graduate students in Japan. 
The experiment consisted of two conditions on a robot’s behavior: relational and non-relational. In the experiment participants interacted with the robot and then they were told that the robot was disposed. It was found that 1) the participants having higher expectation of rapport with the robot showed more moral expansiveness for the robot measured as degrees of reasoning about the robot as having mental states, a social other, and a moral other, in comparison with those having lower expectation, and 2) in the group of the participants having lower expectation of rapport with the robot, those facing to the robot with relational behaviors showed more degrees of reasoning about the robot as a social other in comparison with those facing the robot without these behaviors.

Publication: Ryo Kitagawa, Yuyi Liu, Takayuki Kanda. Human-inspired Motion Planning for Omni-directional Social Robots, HRI ’21: Proceedings of the 2021 ACM/IEEE International Conference on Human-Robot Interaction, pp. 34–42, 2021.03 https://doi.org/10.1145/3434073.3444679

Abstract: Omni-directional robots have gradually been popular for social interactions with people in human environments. The characteristics of omni-directional bases allow the robots to change their body orientation freely while moving straight. However, human spectators show dislike when observing robots behave unnaturally. In this paper, we observed how humans naturally move to goals and then developed a motion planning algorithm for omni-directional robots to resemble human movements in a time-efficient manner. Instead of treating the translation and rotation of a robot separately, the proposed motion planner couples the two motions with constraints inspired from the observation of human behaviors. We implemented the proposed method onto an omni-directional robot and conducted navigation experiments in a shop with shelves and narrow corridors at width of 90cm.
Results from a within-participants study of 300 human spectators validated that the proposed human-inspired motion planner provided people with more natural and predictable feelings compared to the common rotate-while-move or rotate-then-move strategies.

Publication: Emmanuel Senft, Satoru Satake, Takayuki Kanda. Would You Mind Me if I Pass by You? Socially-Appropriate Behaviour for an Omni-based Social Robot in Narrow Environment, In Proceedings of the 2020 ACM/IEEE International Conference on Human-Robot Interaction (HRI ’20), March 23–26, 2020, Cambridge, United Kingdom. ACM, New York, NY, USA, pp. 539-547. https://doi.org/10.1145/3319502.3374812

Abstract: Interacting physically with robots and sharing environment with them leads to situations where humans and robots have to cross each other in narrow corridors. In these cases, the robot has to make space for the human to pass. From observation of human-human crossing behaviours, we isolated two main factors in this avoiding behaviour: body rotation and sliding motion. We implemented a robot controller able to vary these factors and explored how this variation impacted on people’s perception. Results from a within-participants study involving 23 participants show that people prefer a robot rotating its body when crossing them. Additionally, a sliding motion is rated as being warmer. These results show the importance of social avoidance when interacting with humans.

Publication: Kazuki Mizumaru, Satoru Satake, Takayuki Kanda, Tetsuo Ono. Stop Doing it! Approaching Strategy for a Robot to Admonish Pedestrians, 2019 14th ACM/IEEE International Conference on Human-Robot Interaction (HRI), pp. 449-457, 2019 https://dl.acm.org/doi/abs/10.5555/3378680.3378756

Abstract: We modeled a robot’s approaching behavior for giving admonishment. We started by analyzing human behaviors. We conducted a data collection in which a guard approached others in two ways: 1) for admonishment, and 2) for a friendly purpose. We analyzed the difference between the admonishing approach and the friendly approach. The approaching trajectories in the two approaching types are similar; nevertheless, there are two subtle differences. First, the admonishing approach is slightly faster (1.3 m/sec) than the friendly approach (1.1 m/sec). Second, at the end of the approach, there is a ‘shortcut’ in the trajectory. We implemented this model of the admonishing approach into a robot. Finally, we conducted a field experiment to verify the effectiveness of the model. A robot is used to admonish people who were using a smartphone while walking. The result shows that significantly more people yield to admonishment from a robot using the proposed method than from a robot using the friendly approach method.

Publication: Yuya Kaneshige, Satoru Satake, Takayuki Kanda, Michita Imai. How to Overcome the Difficulties in Programming and Debugging Mobile Social Robots?, HRI ’21: Proceedings of the 2021 ACM/IEEE International Conference on Human-Robot Interaction, pp. 361–369, 2021.03 https://doi.org/10.1145/3434073.3444674

Abstract: We studied the programming and debugging processes of an autonomous mobile social robot with a focus on the programmers. This process is time-consuming in a populated environment where a mobile social robot is designed to interact with real pedestrians. From our observations, we identified two types of time-wasting behaviors among programmers: cherry-picking and a shortage of coverage in their testing. We developed a new tool, a test generator framework, to help avoid these testing time-wasters. This framework generates new testing scenarios to be used in a simulator by blending a user-prepared test with pre-stored pedestrian patterns. Finally, we conducted a user study to verify the effects of our test generator. The results showed that our test generator significantly reduced the programming and debugging time needed for autonomous mobile social robots.

Publication: Chao Shi, Satoru Satake, Takayuki Kanda, Hiroshi Ishiguro. A Robot that Distributes Flyers to Pedestrians in a Shopping Mall, International Journal of Social Robotics, Vol. 10, pp. 421–437, 2018 https://doi.org/10.1007/s12369-017-0442-7

Abstract: This paper reports our research on developing a robot that distributes flyers to pedestrians. The difficulty is that since the potential receivers are pedestrians who are not necessarily cooperative, the robot needs to appropriately plan its motions, making it easy and non-obstructive for the potential receivers to accept the flyers. We analyzed people’s distributing behavior in a real shopping mall and found that successful distributors approach pedestrians from the front and only extend their arms near the target pedestrian. We also found that pedestrians tend to accept flyers if previous pedestrians took them. Based on these analyses, we developed a behavior model of the robot’s behavior, implemented it in a humanoid robot, and confirmed its effectiveness in a field experiment.

Publication: Malcolm Doering, Dražen Brščić, Takayuki Kanda. Data-driven Imitation Learning for a Shopkeeper Robot with Periodically Changing Product Information, ACM Transactions on Human-Robot Interaction (THRI), (in press)

Abstract: Data-driven imitation learning enables service robots to learn social interaction behaviors, but these systems cannot adapt after training to changes in the environment, e.g. changing products in a store. To solve this, a novel learning system that uses neural attention and approximate string matching to copy information from a product information database to its output is proposed. A camera shop interaction dataset was simulated for training/testing. The proposed system was found to outperform a baseline and a previous state-of-the-art in an offline, human-judged evaluation.

Publication: Amal Nanavati, Malcolm Doering, Dražen Brščić, and Takayuki Kanda. 2020. Autonomously Learning One-To-Many Social Interaction Logic from Human-Human Interaction Data. In Proceedings of the 2020 ACM/IEEE International Conference on Human-Robot Interaction (HRI’20), March 23-26, 2020, Cambridge, United Kingdom. ACM, New York, NY, USA, 9 pages.

Abstract: We envision a future where service robots autonomously learn how to interact with humans directly from human-human interaction data, without any manual intervention. In this paper, we present a data-driven pipeline that: (1) takes in low-level data of a human shopkeeper interacting with multiple customers (28 hours of collected data); (2) autonomously extracts high-level actions from that data; and (3) learns – without manual intervention – how a robotic shopkeeper should respond to customers’ actions online. Our proposed system for learning the interaction logic uses neural networks to first learn which customer actions are important to respond to and then learn how the shopkeeper should respond to those important customer actions.
We present a novel technique for learning which customer actions are important by first learning the hidden causal relationship between customer and shopkeeper actions. In an offline evaluation, we show that our proposed technique significantly outperforms state-of-the-art baselines, in both which customer actions are important and how to respond to them.

Publication: Malcolm Doering, Takayuki Kanda, Hiroshi Ishiguro. Neural-network-based Memory for a Social Robot: Learning a Memory Model of Human Behavior from Data, ACM Transactions on Human-Robot Interaction, 8(9), Art. No. 19, pp 1–27, 2019 https://doi.org/10.1145/3338810

Abstract: Many recent studies have shown that behaviors and interaction logic for social robots can be learned automatically from natural examples of human-human interaction by machine learning algorithms, with minimal input from human designers [1–4]. In this work, we exceed the capabilities of the previous approaches by giving the robot memory. In earlier work, the robot’s actions were decided based only on a narrow temporal window of the current interaction context. However, human behaviors often depend on more temporally distant events in the interaction history. Thus, we raise the question of whether (and how) an automated behavior learning system can learn a memory representation of interaction history within a simulated camera shop scenario. An analysis of the types of memory-setting and memory-dependent actions that occur in the camera shop scenario is presented. Then, to create more examples of such actions for evaluating a shopkeeper robot behavior learning system, an interaction dataset is simulated. A Gated Recurrent Unit (GRU) neural network architecture is applied in the behavior learning system, which learns a memory representation for performing memory-dependent actions.
In an offline evaluation, the GRU system significantly outperformed a without-memory baseline system at generating appropriate memory-dependent actions. Finally, an analysis of the GRU architecture’s memory representation is presented.

Publication: Malcolm Doering, Phoebe Liu, Dylan F. Glas, Takayuki Kanda, Dana Kulić, Hiroshi Ishiguro. Curiosity Did Not Kill the Robot: A Curiosity-based Learning System for a Shopkeeper Robot, ACM Transactions on Human-Robot Interaction, 8(3), Art. No. 15, pp 1–24, 2019 https://doi.org/10.1145/3326462

Abstract: Learning from human interaction data is a promising approach for developing robot interaction logic, but behaviors learned only from offline data simply represent the most frequent interaction patterns in the training data, without any adaptation for individual differences. We developed a robot that incorporates both data-driven and interactive learning. Our robot first learns high-level dialog and spatial behavior patterns from offline examples of human–human interaction. Then, during live interactions, it chooses among appropriate actions according to its curiosity about the customer’s expected behavior, continually updating its predictive model to learn and adapt to each individual. In a user study, we found that participants thought the curious robot was significantly more humanlike with respect to repetitiveness and diversity of behavior, more interesting, and better overall in comparison to a non-curious robot.

Publication: Yucel Z, Zanlungo F, Feliciani C, Gregorj A, Kanda T (2019) Identification of social relation within pedestrian dyads. PLoS ONE 14(10): e0223656. https://doi.org/10.1371/journal.pone.0223656

Abstract: This study focuses on social pedestrian groups in public spaces and makes an effort to identify the type of social relation between the group members. As a first step for this identification problem, we focus on dyads (i.e. 2 people groups).
Moreover, as a mutually exclusive categorization of social relations, we consider the domain-based approach of Bugental, which precisely corresponds to social relations of colleagues, couples, friends and families, and identify each dyad with one of those relations. For this purpose, we use anonymized trajectory data and derive a set of observables thereof, namely, inter-personal distance, group velocity, velocity difference and height difference. Subsequently, we use the probability density functions (pdf) of these observables as a tool to understand the nature of the relation between pedestrians. To that end, we propose different ways of using the pdfs. Namely, we introduce a probabilistic Bayesian approach and contrast it to a functional metric one and evaluate the performance of both methods with appropriate assessment measures. This study stands out as the first attempt to automatically recognize social relation between pedestrian groups. Additionally, in doing that it uses completely anonymous data and proves that social relation is still possible to recognize with a good accuracy without invading privacy. In particular, our findings indicate that significant recognition rates can be attained for certain categories and with certain methods. Specifically, we show that a very good recognition rate is achieved in distinguishing colleagues from leisure-oriented dyads (families, couples and friends), whereas the distinction between the leisure-oriented dyads results to be inherently harder, but still possible at reasonable rates, in particular if families are restricted to parent-child groups. In general, we establish that the Bayesian method outperforms the functional metric one due, probably, to the difficulty of the latter to learn observable pdfs from individual trajectories.
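
As a rough illustration of how pdfs of observables can feed a Bayesian decision, the sketch below estimates one histogram pdf per relation and observable, then picks the relation with the highest log posterior for a new dyad. It is not the method of the paper: the function names, bin count, value ranges, equal priors, the independence assumption across observables and samples, and all sample values are assumptions made for illustration only.

```python
import math

# Toy ranges for two of the observables named in the abstract (assumed values).
RANGES = {"distance": (0.0, 2.0), "velocity": (0.0, 2.5)}
N_BINS = 10


def bin_index(value, lo, hi, n_bins):
    """Map a value to its histogram bin, clamping values outside [lo, hi)."""
    width = (hi - lo) / n_bins
    return min(n_bins - 1, max(0, int((value - lo) / width)))


def fit_pdf(values, lo, hi, n_bins=N_BINS, alpha=1.0):
    """Histogram estimate of a pdf with additive smoothing (alpha per bin)."""
    counts = [alpha] * n_bins
    for v in values:
        counts[bin_index(v, lo, hi, n_bins)] += 1
    width = (hi - lo) / n_bins
    total = sum(counts)
    return [c / (total * width) for c in counts]


def classify(dyad, pdfs, priors):
    """Return the relation with the highest log posterior for one dyad.

    Treats observables and time samples as independent (a simplifying assumption).
    """
    scores = {}
    for relation, prior in priors.items():
        score = math.log(prior)
        for name, values in dyad.items():
            lo, hi = RANGES[name]
            pdf = pdfs[relation][name]
            score += sum(math.log(pdf[bin_index(v, lo, hi, len(pdf))]) for v in values)
        scores[relation] = score
    return max(scores, key=scores.get), scores


# Made-up training samples (metres, metres per second); not data from the study.
training = {
    "colleagues": {"distance": [0.85, 0.90, 0.95, 0.87, 0.92],
                   "velocity": [1.30, 1.35, 1.40, 1.30, 1.45]},
    "families": {"distance": [0.65, 0.70, 0.75, 0.68, 0.72],
                 "velocity": [1.05, 1.10, 1.15, 1.10, 1.20]},
}
pdfs = {rel: {name: fit_pdf(vals, *RANGES[name]) for name, vals in obs.items()}
        for rel, obs in training.items()}
priors = {"colleagues": 0.5, "families": 0.5}

label, scores = classify({"distance": [0.90, 0.88], "velocity": [1.35, 1.40]}, pdfs, priors)
print(label)
```

Working in log space keeps the product of many small likelihoods numerically stable, and the additive smoothing prevents an empty bin from producing a zero likelihood.
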

Publication: Zanlungo F, Yücel Z, Kanda T (2019) Intrinsic group behaviour II: On the dependence of triad spatial dynamics on social and personal features; and on the effect of social interaction on small group dynamics. PLoS ONE 14(12): e0225704. https://doi.org/10.1371/journal.pone.0225704

Abstract: In a follow-up to our work on the dependence of walking dyad dynamics on intrinsic properties of the group, we now analyse how these properties affect groups of three people (triads), taking also in consideration the effect of social interaction on the dynamical properties of the group. We show that there is a strong parallel between triads and dyads. Work-oriented groups are faster and walk at a larger distance between them than leisure-oriented ones, while the latter move in a less ordered way. Such differences are present also when colleagues are contrasted with friends and families; nevertheless the similarity between friend and colleague behaviour is greater than the one between family and colleague behaviour. Male triads walk faster than triads including females, males keep a larger distance than females, and same gender groups are more ordered than mixed ones. Groups including tall people walk faster, while those with elderly or children walk at a slower pace. Groups including children move in a less ordered fashion. Results concerning relation and gender are particularly strong, and we investigated whether they hold also when other properties are kept fixed. While this is clearly true for relation, patterns relating gender often resulted to be diminished. For instance, the velocity difference due to gender is reduced if we compare only triads in the colleague relation. The effects on group dynamics due to intrinsic properties are present regardless of social interaction, but socially interacting groups are found to walk in a more ordered way.
This has an opposite effect on the space occupied by non-interacting dyads and triads, since loss of structure makes dyads larger, but causes triads to lose their characteristic V formation and walk in a line (i.e., occupying more space in the direction of movement but less space in the orthogonal one).
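
The "space occupied" comparison can be made concrete by projecting pedestrian positions onto the walking direction and onto its orthogonal. The sketch below is only an illustration under assumed conditions: `group_extent` is a hypothetical helper, and the coordinates and velocity are made up rather than taken from the study's data.

```python
import math


def group_extent(positions, velocity):
    """Return (depth, width) of a group in metres.

    depth is the extent along the walking direction, width the extent
    orthogonal to it. positions is a list of (x, y), velocity is (vx, vy).
    """
    speed = math.hypot(velocity[0], velocity[1])
    ux, uy = velocity[0] / speed, velocity[1] / speed  # unit vector along motion
    along = [x * ux + y * uy for x, y in positions]
    across = [-x * uy + y * ux for x, y in positions]
    return max(along) - min(along), max(across) - min(across)


walking = (0.0, 1.2)  # assumed velocity: moving in +y at 1.2 m/s

# Made-up triad layouts: a V formation with the central pedestrian slightly
# behind, and the same three pedestrians walking in a line.
v_formation = [(-0.7, 0.3), (0.0, 0.0), (0.7, 0.3)]
in_a_line = [(0.0, 0.0), (0.0, 0.8), (0.0, 1.6)]

v_depth, v_width = group_extent(v_formation, walking)
line_depth, line_width = group_extent(in_a_line, walking)
print(v_depth, v_width, line_depth, line_width)
```

With these toy layouts the line formation has the larger depth and the smaller width, which matches the qualitative description of triads that lose their V formation.
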

Using a Rotating 3D LiDAR on a Mobile Robot for Estimation of Person’s Body Angle and Gender

Dražen Brščić, Rhys Wyn Evans, Matthias Rehm, Takayuki Kanda, Sensors, 2020

Recognition of Human Characteristics Using Multiple Mobile Robots with 3D LiDARs

Koki Makita, Dražen Brščić, Takayuki Kanda, SII 2021

Understanding a public environment via continuous robot observations

Deneth Karunarathne, Yoichi Morales, Takayuki Kanda, Hiroshi Ishiguro, RAS 2020

Monitoring Blind Regions with Prior Knowledge Based Sound Localization

Jani Even, Satoru Satake, Takayuki Kanda, ICSR 2019

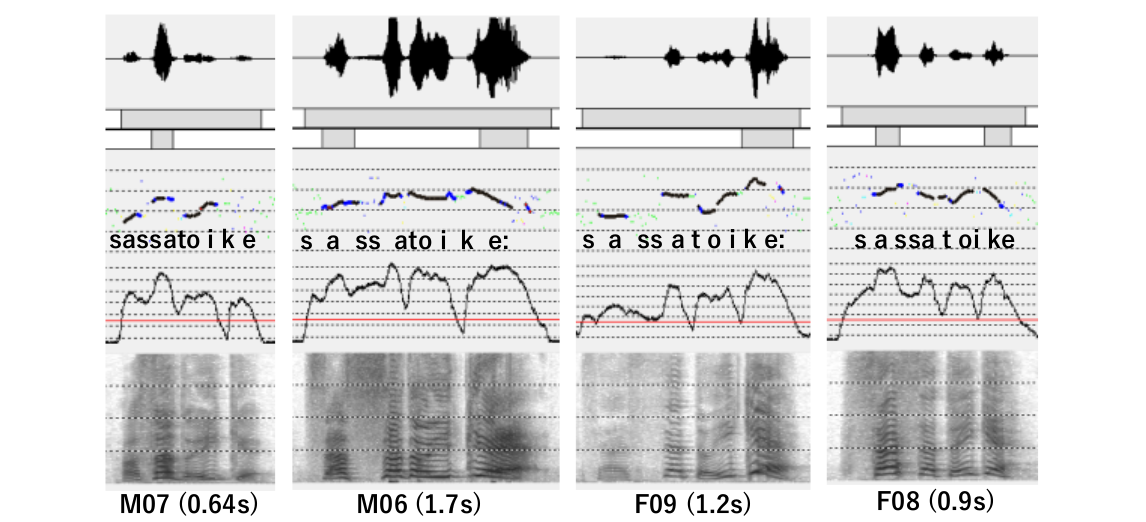

Prosodic and voice quality analyses of loud speech: differences of hot anger and far-directed speech

Carlos T. Ishi, Takayuki Kanda, SMM 2019

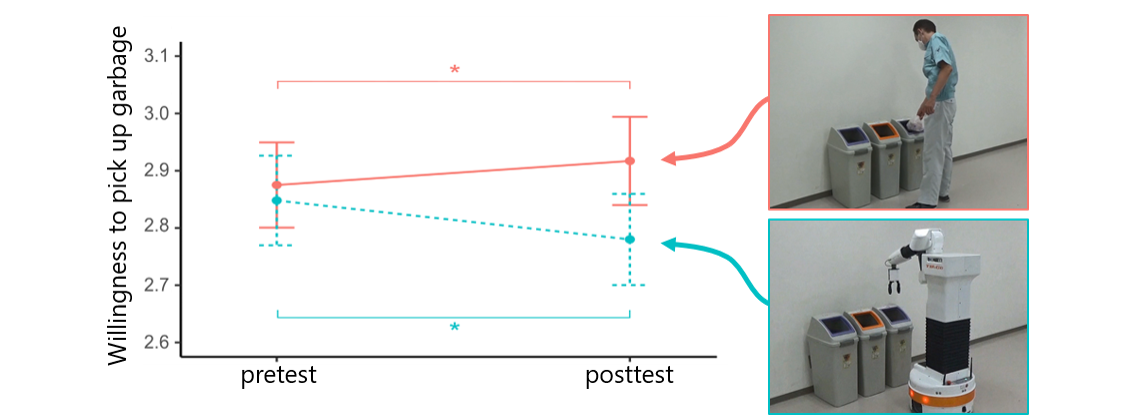

Influencing Moral Behavior Through Mere Observation of Robot Work: Video-based Survey on Littering Behavior

Risa Maeda, Dražen Brščić, Takayuki Kanda, HRI 2021

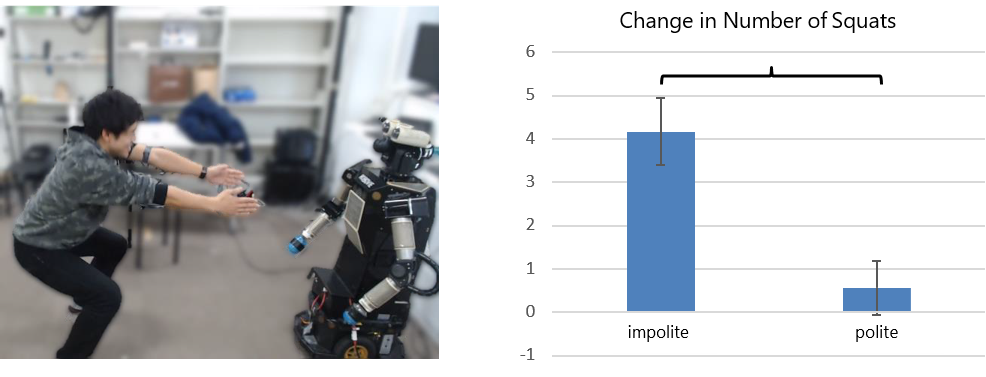

“Is this all you can do? Harder!”: The Effects of (Im)Polite Robot Encouragement on Exercise Effort

Daniel J. Rea, Sebastian Schneider, Takayuki Kanda, HRI 2021

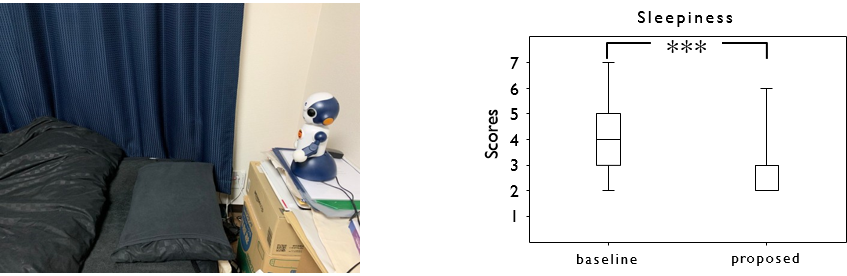

Wake up and talk with me! In-the-field study of an autonomous interactive wake up robot

Yuma Oda, Jani Even and Takayuki Kanda, ICSR 2020

The effects of assistive walking robots for health care support on older persons: a preliminary field experiment in an elder care facility

Tatsuya Nomura, Takayuki Kanda, Sachie Yamada, Tomohiro Suzuki, Intelligent Service Robotics, 2021

Will Older Adults Accept a Humanoid Robot as a Walking Partner?

Deneth Karunarathne, Yoichi Morales, Tatsuya Nomura, Takayuki Kanda, Hiroshi Ishiguro, IJSR, 2019

Walking partner robot chatting about scenery

Taichi Sono, Satoru Satake, Takayuki Kanda, Michita Imai, AdvRob, 2019

People’s V-Formation and Side-by-Side Model Adapted to Accompany Groups of People by Social Robots

Ely Repiso, Francesco Zanlungo, Takayuki Kanda, Anaís Garrell, Alberto Sanfeliu, IROS 2019

Model of Side-by-Side Walking Without the Robot Knowing the Goal

Deneth Karunarathne, Yoichi Morales, Takayuki Kanda, Hiroshi Ishiguro, IJSR, 2018

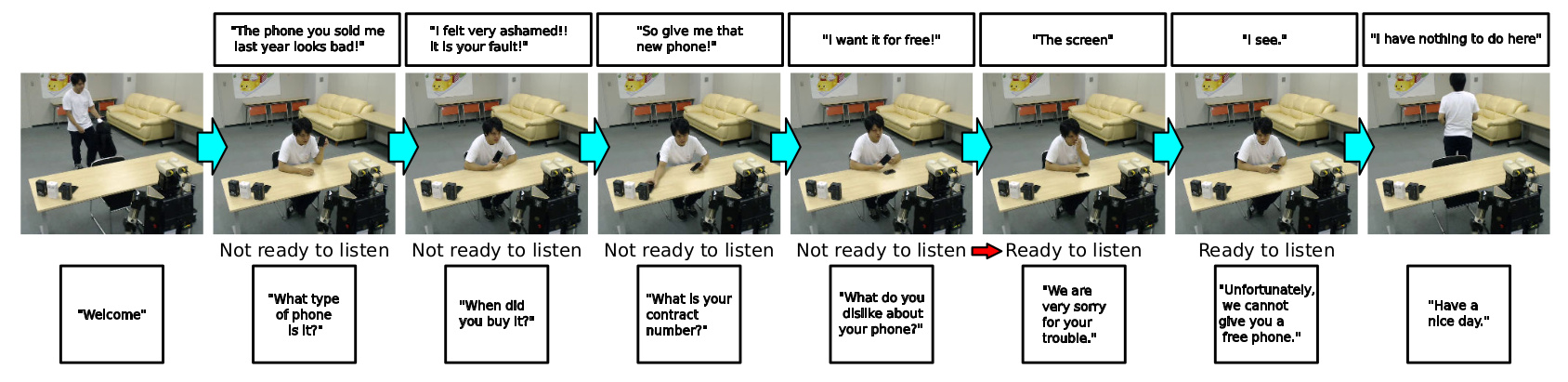

Can a Robot handle Customers with Unreasonable Complaints?

Daichi Morimoto, Jani Even, Takayuki Kanda, HRI 2020

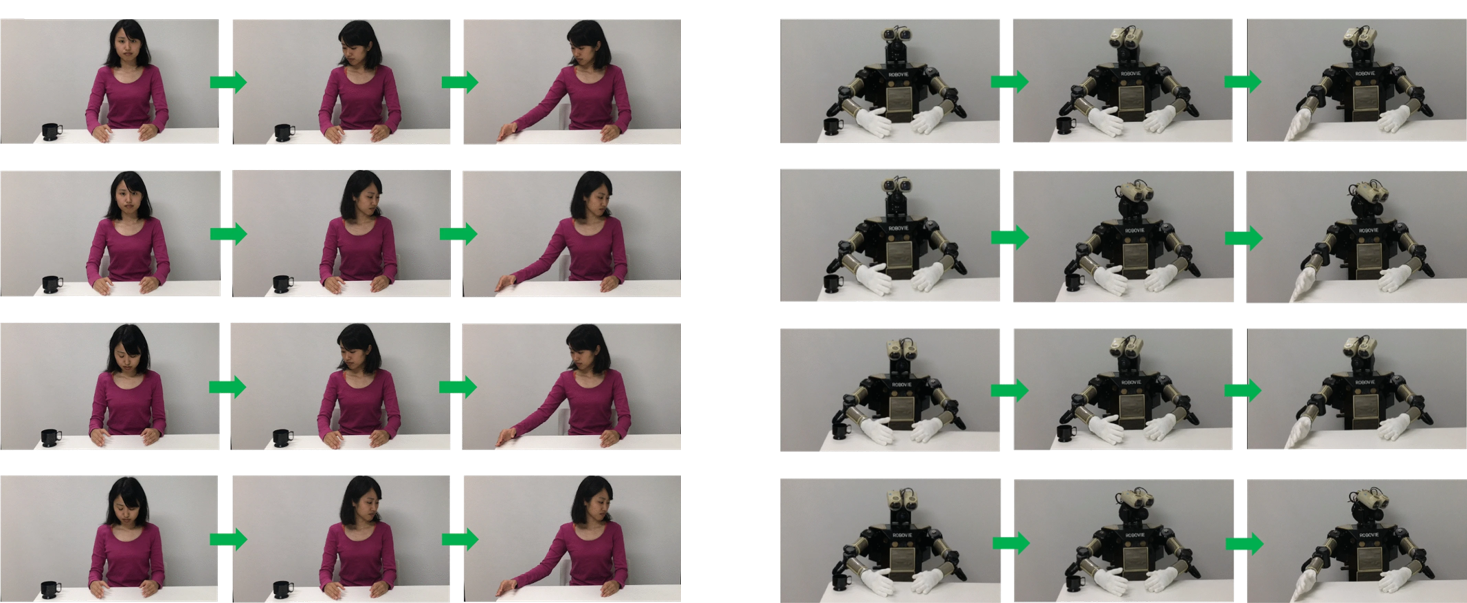

Can a Social Robot Encourage Children’s Self-Study?

Risa Maeda, Jani Even, Takayuki Kanda, IROS 2019

Parent Disciplining Styles to Prevent Children’s Misbehaviors toward a Social Robot

Jorge Gallego Pérez, Kazuo Hiraki, Yasuhiro Kanakogi, Takayuki Kanda, HAI 2019

The understanding of congruent and incongruent referential gaze in 17-month-old infants: an eye-tracking study comparing human and robot

Federico Manzi et al., Scientific Reports, 2020

Infants’ perceptions of cooperation between a human and robot

Ying Wang et al., InfChildDev, 2020

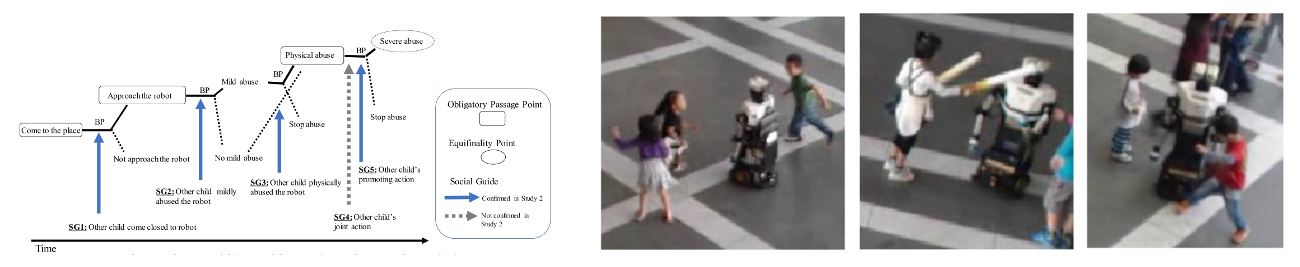

An Escalating Model of Children’s Robot Abuse

Sachie Yamada, Takayuki Kanda, Kanako Tomita, HRI 2020

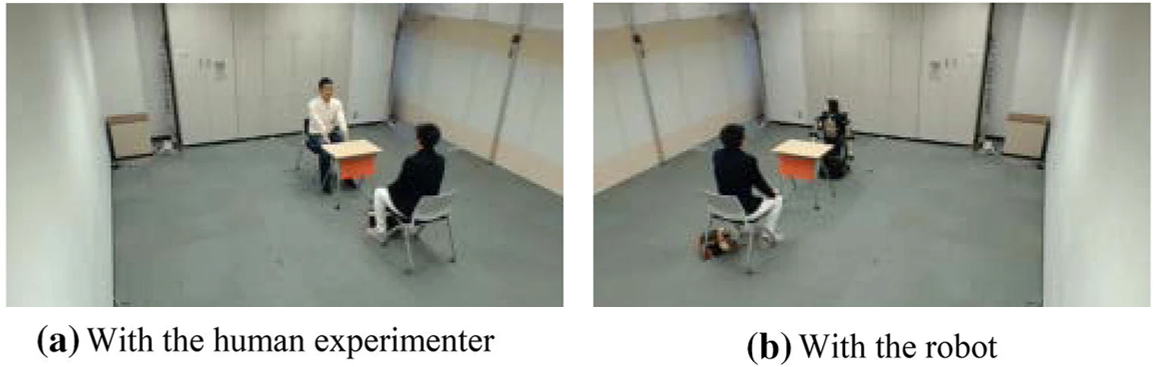

Do people with social anxiety feel anxious about interacting with a robot?

Tatsuya Nomura, Takayuki Kanda, Tomohiro Suzuki, Sachie Yamada, AI&Soc, 2020

Robostress, a New Approach to Understanding Robot Usage, Technology, and Stress

Kimmo J. Vänni, Sirpa E. Salin, John-John Cabibihan, Takayuki Kanda, ICSR 2019

Human Group Presence, Group Characteristics, and Group Norms Affect Human-Robot Interaction in Naturalistic Settings

Marlena R. Fraune, Selma Šabanović and Takayuki Kanda, Front. Robot. AI, 2019

Preliminary Investigation of Moral Expansiveness for Robots

Tatsuya Nomura, Kazuki Otsubo, Takayuki Kanda, ARSO 2018

Human-inspired Motion Planning for Omni-directional Social Robots

Ryo Kitagawa, Yuyi Liu, Takayuki Kanda, HRI 2021

Would You Mind Me if I Pass by You?: Socially-Appropriate Behaviour for an Omni-based Social Robot in Narrow Environment

Emmanuel Senft, Satoru Satake, Takayuki Kanda, HRI 2020

Stop Doing it! Approaching Strategy for a Robot to Admonish Pedestrians

Kazuki Mizumaru, Satoru Satake, Takayuki Kanda, Tetsuo Ono, HRI 2019

How to Overcome the Difficulties in Programming and Debugging Mobile Social Robots?

Yuya Kaneshige, Satoru Satake, Takayuki Kanda, Michita Imai, HRI 2021

A Robot that Distributes Flyers to Pedestrians in a Shopping Mall

Chao Shi, Satoru Satake, Takayuki Kanda, Hiroshi Ishiguro, IJSR, 2018

Data-driven Imitation Learning for a Shopkeeper Robot with Periodically Changing Product Information

Malcolm Doering, Dražen Brščić, Takayuki Kanda, THRI, 2021

Autonomously Learning One-To-Many Social Interaction Logic from Human-Human Interaction Data

Amal Nanavati, Malcolm Doering, Dražen Brščić, Takayuki Kanda, HRI 2020

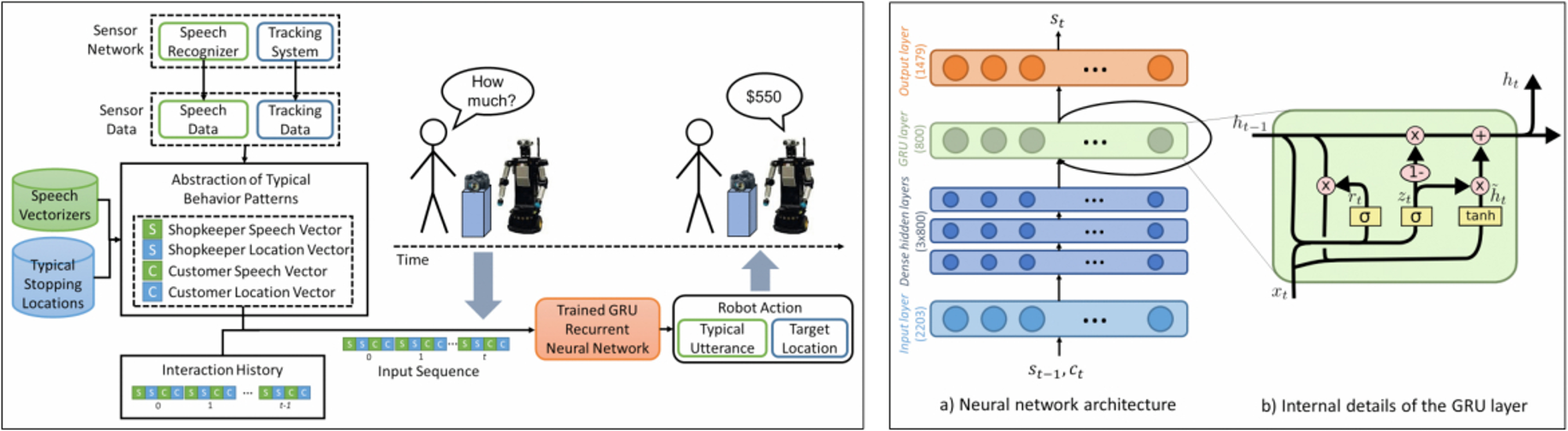

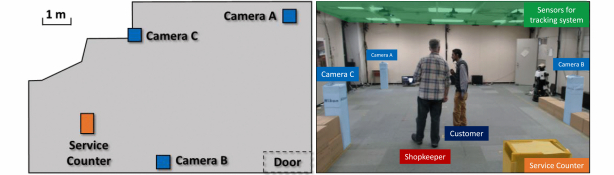

Neural-network-based Memory for a Social Robot: Learning a Memory Model of Human Behavior from Data

Malcolm Doering, Takayuki Kanda, Hiroshi Ishiguro, THRI 2019

Curiosity Did Not Kill the Robot: A Curiosity-based Learning System for a Shopkeeper Robot

Malcolm Doering, Phoebe Liu, Dylan F. Glas, Takayuki Kanda, Dana Kulić, Hiroshi Ishiguro, THRI 2019

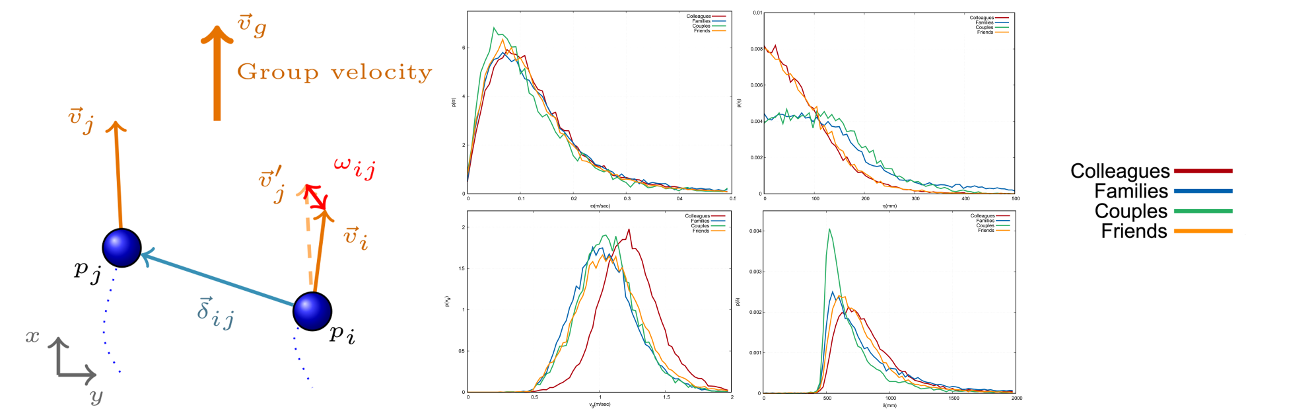

Identification of social relation within pedestrian dyads

Zeynep Yucel, Francesco Zanlungo, Claudio Feliciani, Adrien Gregorj, Takayuki Kanda, PLoS ONE, 2019

Intrinsic group behaviour II: On the dependence of triad spatial dynamics on social and personal features; and on the effect of social interaction on small group dynamics

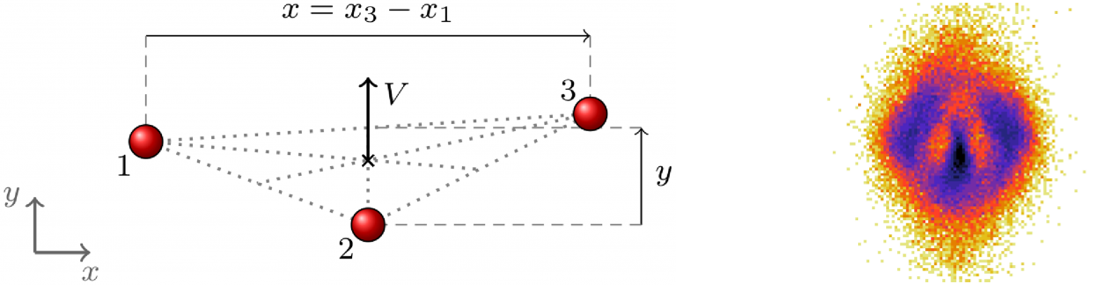

Francesco Zanlungo, Zeynep Yücel, Takayuki Kanda, PLoS ONE, 2019